#

📌 Perceptron이란?

- 1957년 프랭크 로젠브라트가 고안한 최초의 인공신경망 모델 중 하나.

- 생물학적 뉴런을 수학적으로 모델링한 인공 뉴런으로, 여러 입력 신호를 받아 각각의 가중치를 곱한 후 이를 합산하여, 활성화 함수를 통해 단일 신호를 출력한다.

- 퍼셉트론의 출력은 신호 유무 (1 또는 0)로 표현된고, 이진 분류 문제 해결에 효과적이다.

📝 Perceptron AND_GATE 실습

🔍 AND 게이트 모델 훈련 후 결과 확인

import numpy as np

import matplotlib.pyplot as plt

class Perceptron:

def __init__(self, input_size, lr=0.1, epochs=10):

self.weights = np.zeros(input_size)

self.bias = 0

self.lr = lr

self.epochs = epochs

self.errors = []

def activation(self, x):

return np.where(x >= 0, 1, 0)

def predict(self, x):

linear_output = np.dot(x, self.weights) + self.bias

return self.activation(linear_output)

def train(self, X, y):

for epoch in range(self.epochs):

total_error = 0

for x1, target in zip(X, y):

prediction = self.predict(x1)

update = self.lr * (target - prediction)

self.weights += update * x1

self.bias += update

total_error += int(update != 0)

self.errors.append(total_error)

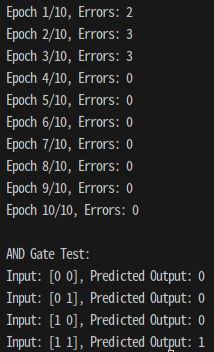

print(f"Epoch {epoch+1}/{self.epochs}, Errors: {total_error}")

# AND 게이트 데이터

X_and = np.array([[0,0],[0,1],[1,0],[1,1]])

y_and = np.array([0,0,0,1])

# 퍼셉트론 모델 훈련

ppn_and = Perceptron(input_size=2)

ppn_and.train(X_and, y_and)

# 예측 결과 확인

print("\nAND Gate Test:")

for x in X_and:

print(f"Input: {x}, Predicted Output: {ppn_and.predict(x)}")

💡 출력 결과

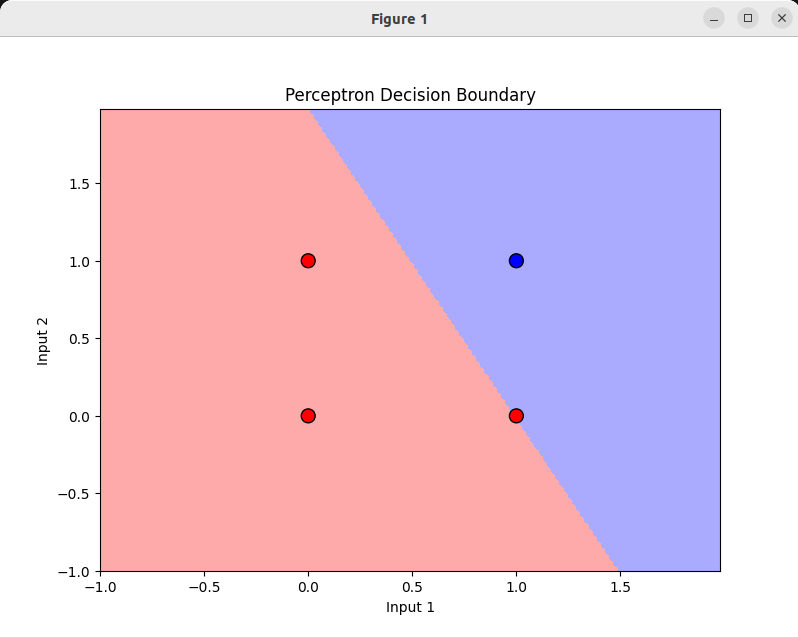

🔍 AND 게이트 결정 경계 시각화

from matplotlib.colors import ListedColormap

def plot_decision_boundary(X, y, model):

cmap_light = ListedColormap(['#FFAAAA', '#AAAAFF'])

cmap_bold = ListedColormap(['#FF0000', '#0000FF'])

h = .02 # mesh grid 간격

x_min, x_max = X[:, 0].min() - 1, X[:, 0].max() + 1

y_min, y_max = X[:, 1].min() - 1, X[:, 1].max() + 1

xx, yy = np.meshgrid(np.arange(x_min, x_max, h),

np.arange(y_min, y_max, h))

Z = model.predict(np.c_[xx.ravel(), yy.ravel()])

Z = Z.reshape(xx.shape)

plt.figure(figsize=(8, 6))

plt.contourf(xx, yy, Z, cmap=cmap_light)

# 실제 데이터 포인트 표시

plt.scatter(X[:, 0], X[:, 1], c=y, cmap=cmap_bold,

edgecolor='k', s=100, marker='o')

plt.xlabel('Input 1')

plt.ylabel('Input 2')

plt.title('Perceptron Decision Boundary')

plt.show()

# AND 게이트 결정 경계 시각화

plot_decision_boundary(X_and, y_and, ppn_and)

💡 출력 결과

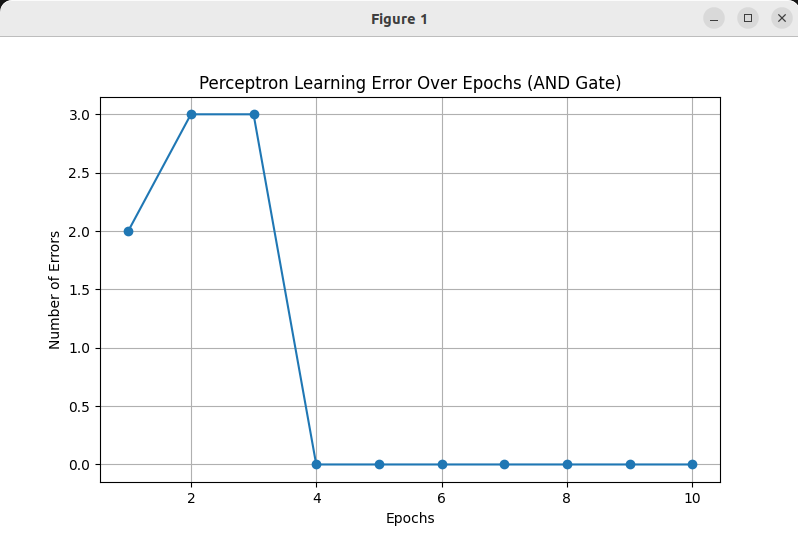

🔍 오류 시각화

plt.figure(figsize=(8, 5))

plt.plot(range(1, len(ppn_and.errors) + 1), ppn_and.errors, marker='o')

plt.xlabel('Epochs')

plt.ylabel('Number of Errors')

plt.title('Perceptron Learning Error Over Epochs (AND Gate)')

plt.grid(True)

plt.show()

💡 출력 결과

📝 Perceptron OR_GATE 실습

🔍 OR 게이트 모델 훈련 후 결과 확인

import numpy as np

import matplotlib.pyplot as plt

class Perceptron:

def __init__(self, input_size, lr=0.1, epochs=10):

self.weights = np.zeros(input_size)

self.bias = 0

self.lr = lr

self.epochs = epochs

self.errors = []

def activation(self, x):

return np.where(x >= 0, 1, 0)

def predict(self, x):

linear_output = np.dot(x, self.weights) + self.bias

return self.activation(linear_output)

def train(self, X, y):

for epoch in range(self.epochs):

total_error = 0

for x1, target in zip(X, y):

prediction = self.predict(x1)

update = self.lr * (target - prediction)

self.weights += update * x1

self.bias += update

total_error += int(update != 0)

self.errors.append(total_error)

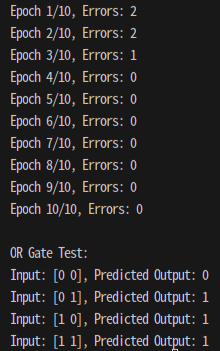

print(f"Epoch {epoch+1}/{self.epochs}, Errors: {total_error}")

# OR 게이트 데이터

X_or = np.array([[0,0],[0,1],[1,0],[1,1]])

y_or = np.array([0,1,1,1])

# 퍼셉트론 모델 훈련

ppn_or = Perceptron(input_size=2)

ppn_or.train(X_or, y_or)

# 예측 결과 확인

print("\nOR Gate Test:")

for x in X_or:

print(f"Input: {x}, Predicted Output: {ppn_or.predict(x)}")

💡 출력 결과

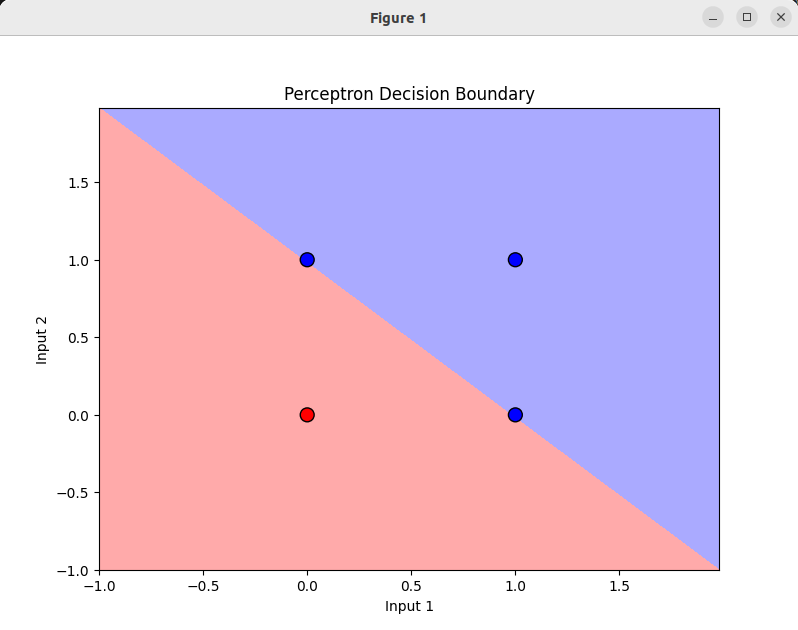

🔍 OR 게이트 결정 경계 시각화

from matplotlib.colors import ListedColormap

def plot_decision_boundary(X, y, model):

cmap_light = ListedColormap(['#FFAAAA', '#AAAAFF'])

cmap_bold = ListedColormap(['#FF0000', '#0000FF'])

h = .02 # mesh grid 간격

x_min, x_max = X[:, 0].min() - 1, X[:, 0].max() + 1

y_min, y_max = X[:, 1].min() - 1, X[:, 1].max() + 1

xx, yy = np.meshgrid(np.arange(x_min, x_max, h),

np.arange(y_min, y_max, h))

Z = model.predict(np.c_[xx.ravel(), yy.ravel()])

Z = Z.reshape(xx.shape)

plt.figure(figsize=(8, 6))

plt.contourf(xx, yy, Z, cmap=cmap_light)

# 실제 데이터 포인트 표시

plt.scatter(X[:, 0], X[:, 1], c=y, cmap=cmap_bold,

edgecolor='k', s=100, marker='o')

plt.xlabel('Input 1')

plt.ylabel('Input 2')

plt.title('Perceptron Decision Boundary')

plt.show()

# OR 게이트 결정 경계 시각화

plot_decision_boundary(X_or, y_or, ppn_or)

💡 출력 결과

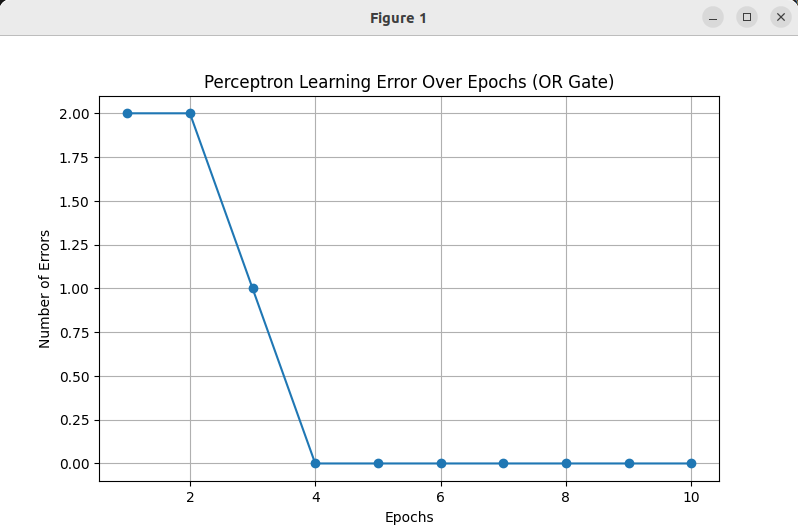

🔍 오류 시각화

plt.figure(figsize=(8, 5))

plt.plot(range(1, len(ppn_or.errors) + 1), ppn_or.errors, marker='o')

plt.xlabel('Epochs')

plt.ylabel('Number of Errors')

plt.title('Perceptron Learning Error Over Epochs (OR Gate)')

plt.grid(True)

plt.show()

💡 출력 결과

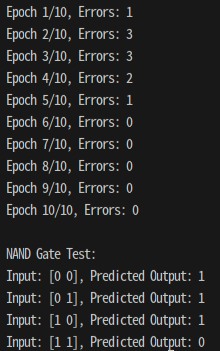

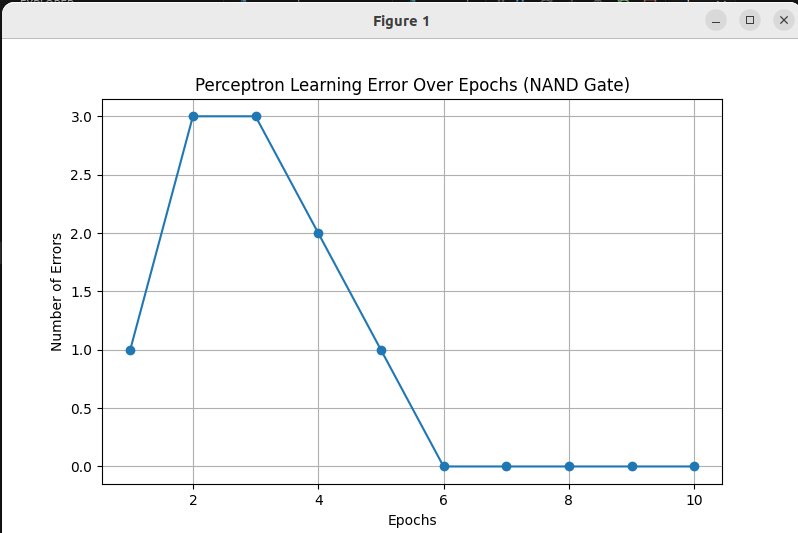

📝 Perceptron NAND_GATE 실습

🔍 NAND 게이트 모델 훈련 후 결과 확인

import numpy as np

import matplotlib.pyplot as plt

class Perceptron:

def __init__(self, input_size, lr=0.1, epochs=10):

self.weights = np.zeros(input_size)

self.bias = 0

self.lr = lr

self.epochs = epochs

self.errors = []

def activation(self, x):

return np.where(x >= 0, 1, 0)

def predict(self, x):

linear_output = np.dot(x, self.weights) + self.bias

return self.activation(linear_output)

def train(self, X, y):

for epoch in range(self.epochs):

total_error = 0

for x1, target in zip(X, y):

prediction = self.predict(x1)

update = self.lr * (target - prediction)

self.weights += update * x1

self.bias += update

total_error += int(update != 0)

self.errors.append(total_error)

print(f"Epoch {epoch+1}/{self.epochs}, Errors: {total_error}")

# NAND 게이트 데이터

X_nand = np.array([[0,0],[0,1],[1,0],[1,1]])

y_nand = np.array([1,1,1,0])

# 퍼셉트론 모델 훈련

ppn_nand = Perceptron(input_size=2)

ppn_nand.train(X_nand, y_nand)

# 예측 결과 확인

print("\nNAND Gate Test:")

for x in X_nand:

print(f"Input: {x}, Predicted Output: {ppn_nand.predict(x)}")

💡 출력 결과

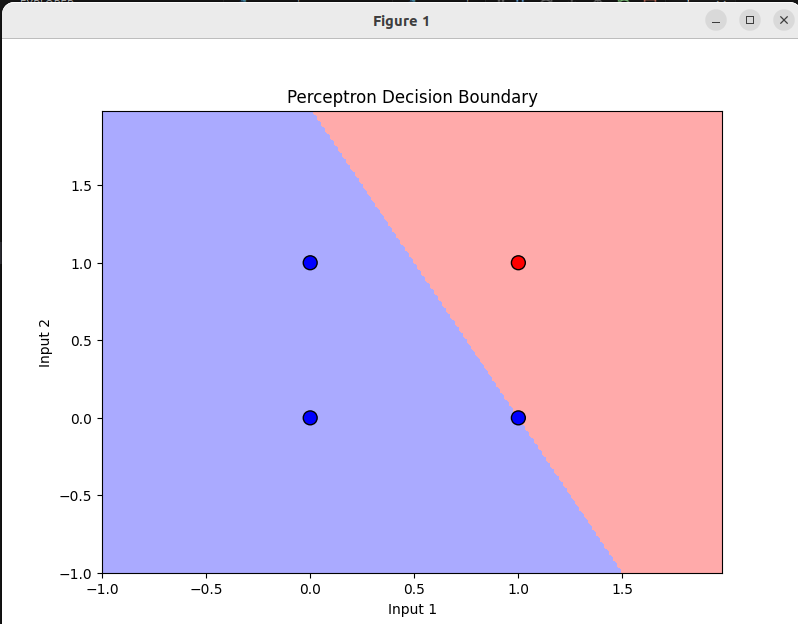

🔍 NAND 게이트 결정 경계 시각화

from matplotlib.colors import ListedColormap

def plot_decision_boundary(X, y, model):

cmap_light = ListedColormap(['#FFAAAA', '#AAAAFF'])

cmap_bold = ListedColormap(['#FF0000', '#0000FF'])

h = .02 # mesh grid 간격

x_min, x_max = X[:, 0].min() - 1, X[:, 0].max() + 1

y_min, y_max = X[:, 1].min() - 1, X[:, 1].max() + 1

xx, yy = np.meshgrid(np.arange(x_min, x_max, h),

np.arange(y_min, y_max, h))

Z = model.predict(np.c_[xx.ravel(), yy.ravel()])

Z = Z.reshape(xx.shape)

plt.figure(figsize=(8, 6))

plt.contourf(xx, yy, Z, cmap=cmap_light)

# 실제 데이터 포인트 표시

plt.scatter(X[:, 0], X[:, 1], c=y, cmap=cmap_bold,

edgecolor='k', s=100, marker='o')

plt.xlabel('Input 1')

plt.ylabel('Input 2')

plt.title('Perceptron Decision Boundary')

plt.show()

# NAND 게이트 결정 경계 시각화

plot_decision_boundary(X_nand, y_nand, ppn_nand)

💡 출력 결과

🔍 오류 시각화

plt.figure(figsize=(8, 5))

plt.plot(range(1, len(ppn_or.errors) + 1), ppn_or.errors, marker='o')

plt.xlabel('Epochs')

plt.ylabel('Number of Errors')

plt.title('Perceptron Learning Error Over Epochs (OR Gate)')

plt.grid(True)

plt.show()

💡 출력 결과

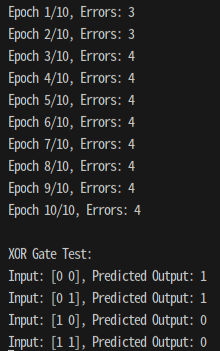

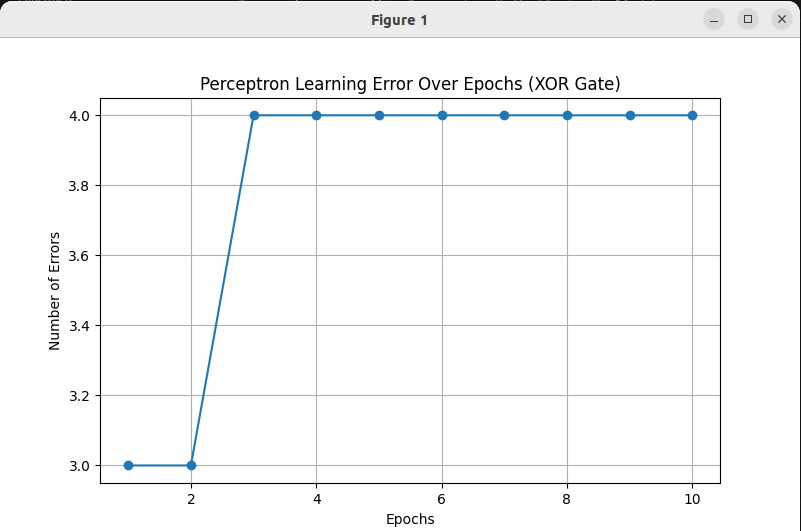

📝 Perceptron XOR_GATE 실습

🔍 XOR 게이트 모델 훈련 후 결과 확인

import numpy as np

import matplotlib.pyplot as plt

class Perceptron:

def __init__(self, input_size, lr=0.1, epochs=10):

self.weights = np.zeros(input_size)

self.bias = 0

self.lr = lr

self.epochs = epochs

self.errors = []

def activation(self, x):

return np.where(x >= 0, 1, 0)

def predict(self, x):

linear_output = np.dot(x, self.weights) + self.bias

return self.activation(linear_output)

def train(self, X, y):

for epoch in range(self.epochs):

total_error = 0

for x1, target in zip(X, y):

prediction = self.predict(x1)

update = self.lr * (target - prediction)

self.weights += update * x1

self.bias += update

total_error += int(update != 0)

self.errors.append(total_error)

print(f"Epoch {epoch+1}/{self.epochs}, Errors: {total_error}")

# XOR 게이트 데이터

X_xor = np.array([[0,0],[0,1],[1,0],[1,1]])

y_xor = np.array([0,1,1,0])

# 퍼셉트론 모델 훈련

ppn_xor = Perceptron(input_size=2)

ppn_xor.train(X_xor, y_xor)

# 예측 결과 확인

print("\nXOR Gate Test:")

for x in X_xor:

print(f"Input: {x}, Predicted Output: {ppn_xor.predict(x)}")

💡 출력 결과

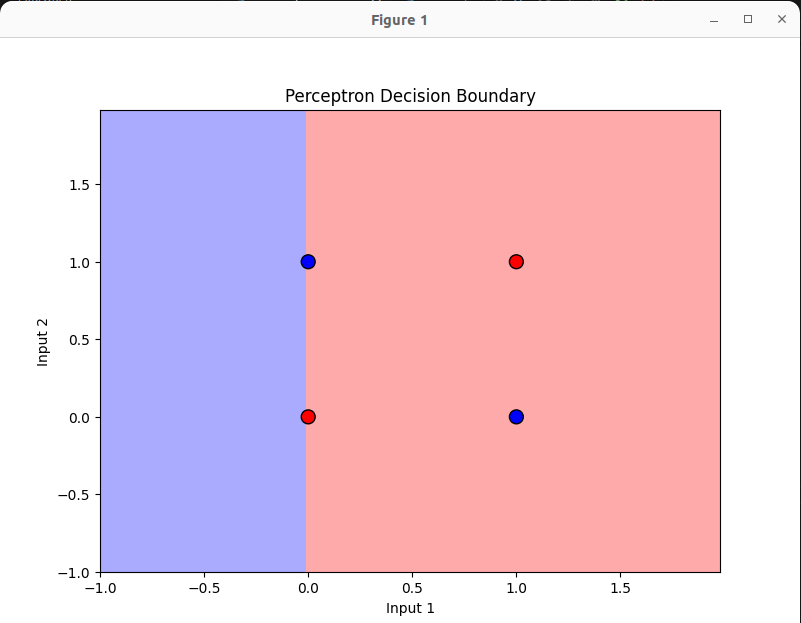

🔍 XOR 게이트 결정 경계 시각화

from matplotlib.colors import ListedColormap

def plot_decision_boundary(X, y, model):

cmap_light = ListedColormap(['#FFAAAA', '#AAAAFF'])

cmap_bold = ListedColormap(['#FF0000', '#0000FF'])

h = .02 # mesh grid 간격

x_min, x_max = X[:, 0].min() - 1, X[:, 0].max() + 1

y_min, y_max = X[:, 1].min() - 1, X[:, 1].max() + 1

xx, yy = np.meshgrid(np.arange(x_min, x_max, h),

np.arange(y_min, y_max, h))

Z = model.predict(np.c_[xx.ravel(), yy.ravel()])

Z = Z.reshape(xx.shape)

plt.figure(figsize=(8, 6))

plt.contourf(xx, yy, Z, cmap=cmap_light)

# 실제 데이터 포인트 표시

plt.scatter(X[:, 0], X[:, 1], c=y, cmap=cmap_bold,

edgecolor='k', s=100, marker='o')

plt.xlabel('Input 1')

plt.ylabel('Input 2')

plt.title('Perceptron Decision Boundary')

plt.show()

# XOR 게이트 결정 경계 시각화

plot_decision_boundary(X_xor, y_xor, ppn_xor)

💡 출력 결과

🔍 오류 시각화

plt.figure(figsize=(8, 5))

plt.plot(range(1, len(ppn_or.errors) + 1), ppn_or.errors, marker='o')

plt.xlabel('Epochs')

plt.ylabel('Number of Errors')

plt.title('Perceptron Learning Error Over Epochs (OR Gate)')

plt.grid(True)

plt.show()

💡 출력 결과

🚨 단층 Perceptron의 한계점

- XOR GATE는 퍼셉트론으로 학습이 되지 않는 문제가 발생하였다.

- 퍼셉트론은 직선 하나로 ●와 ○를 나눌 수 있어야 학습이 된다.

- 하지만 XOR은 직선 하나로는 절대 ●와 ○를 나눌 수 없다.